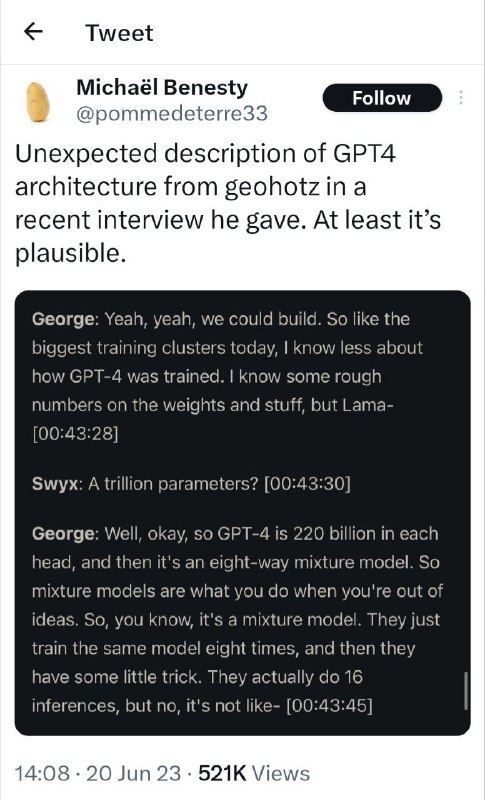

New update on the architecture of GPT-4 📣

It is said to be a mixture of eight experts, each comprising 220 billion parameters. That brings it to a total of 1.76 trillion parameters (8 × 220 billion)! 🤯

The rumor has been corroborated by Soumith Chintala, one of the creators of PyTorch.

In this context, the experts are separate copies of the GPT model with 220 billion parameters each, trained independently and most likely on different datasets.

During inference, OpenAI reportedly uses some clever tricks to decide which experts' answers should be provided to the user.
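
What those tricks are has not been made public. For intuition only, here is a minimal sketch of one common approach from the mixture-of-experts literature: a gate scores every expert, the top-k experts are kept, and their outputs are blended with softmax weights. The gate scores, the toy expert outputs, and the names `route_top_k` and `combine` are all made up for illustration; nothing here is confirmed to match GPT-4.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts; return (expert_index, weight) pairs."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    return list(zip(chosen, weights))

def combine(expert_outputs, routing):
    """Weighted sum of the selected experts' output vectors."""
    dim = len(expert_outputs[0])
    mixed = [0.0] * dim
    for idx, weight in routing:
        for d in range(dim):
            mixed[d] += weight * expert_outputs[idx][d]
    return mixed

# Hypothetical gate scores for 8 experts (assumption: in a real model a
# learned gating network would produce these for each input).
gate_scores = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 1.2]

# Toy stand-ins for expert outputs: expert i returns the vector [i, 2*i].
expert_outputs = [[float(i), 2.0 * i] for i in range(8)]

routing = route_top_k(gate_scores, k=2)
mixed = combine(expert_outputs, routing)
print(routing)
print(mixed)
```

With k=2, only two of the eight experts contribute to each answer, which is the usual argument for this design: the total parameter count is large, but only part of it is used per request.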

🏷️ #GPT4 #architecture

@ppprompt
