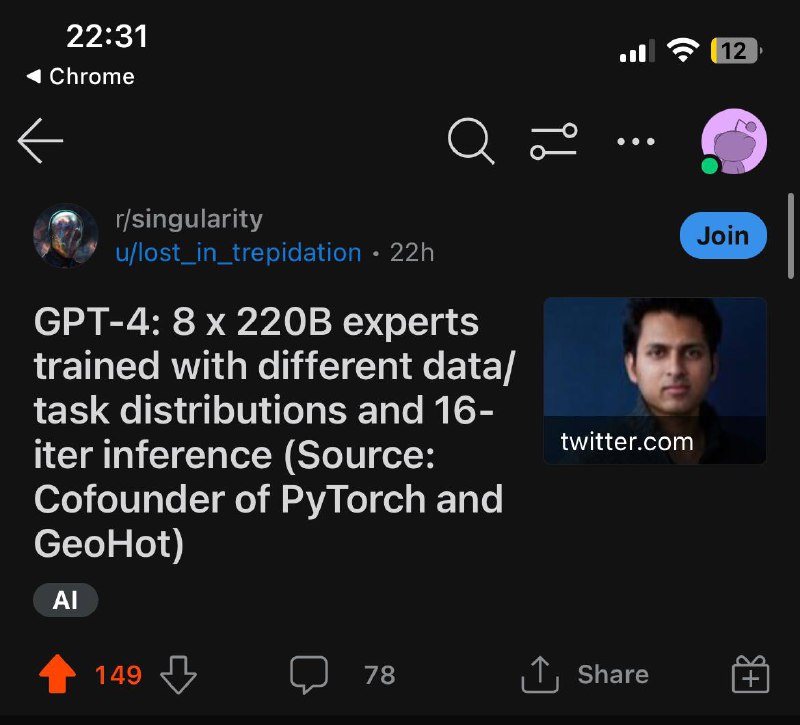

A pretty good theory (or leaked info?) about the architecture of GPT-4: it is 8 smaller models, trained separately and mixed into one. So it is not a single 1T model, but rather 8 x 220B models under one roof.
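A toy sketch of what "8 models under one roof" could look like, assuming the models are combined by a softmax gate that weights each model's output. The gate, the function names (`softmax`, `mix`) and the weighting scheme are hypothetical illustrations; the post does not say how the models are mixed. It also shows the arithmetic: 8 x 220B adds up to about 1.76T parameters in total, spread across eight models rather than one dense network.

```python
import math

NUM_MODELS = 8                         # rumored number of sub-models
PARAMS_PER_MODEL = 220_000_000_000     # rumored size of each sub-model (unconfirmed)


def softmax(logits):
    """Turn raw gate scores into weights that sum to 1 (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mix(model_outputs, gate_logits):
    """Hypothetical mixing step: weighted average of each model's output vector.

    model_outputs: one output vector (list of floats) per sub-model.
    gate_logits: one score per sub-model; a higher score gives it more weight.
    """
    weights = softmax(gate_logits)
    dim = len(model_outputs[0])
    return [sum(w * out[i] for w, out in zip(weights, model_outputs)) for i in range(dim)]


total_params = NUM_MODELS * PARAMS_PER_MODEL
print(f"{total_params / 1e12:.2f}T parameters in total")  # 1.76T parameters in total
```

With equal gate scores every model gets a weight of 1/8, so eight identical outputs mix back to that same output; unequal scores let some models dominate the result.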

🔔 New update on this post

🏷️ #gpt4 #architecture

@ppprompt